Why software testing just became the most important skill in technology

Ash Gawthorp, Ten10’s Chief Technology Officer, discusses why the recent rise of AI agents proves the lasting importance of software testing skills in the modern tech workforce

If you’re working in technology right now and you feel a bit lost—like everyone around you has figured out this AI thing while you’re still trying to understand what MCP stands for, or whether you should be using agents, or how to keep ChatGPT from making things up—you’re not alone.

In fact, you’re in remarkably good company.

The admission that validated an industry’s anxiety

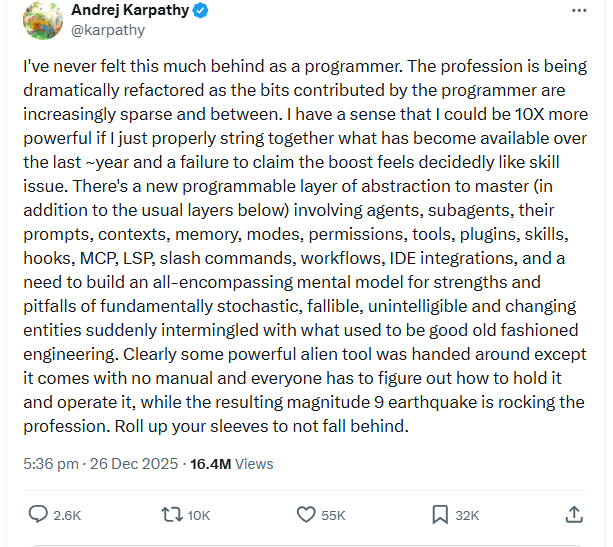

In late December 2025, a post on X (formerly Twitter) immediately went viral, garnering over 55,000 likes, 10,000 retweets, and 16.4 million views. The message sparked intense discussions across the entire tech industry because of both what it said and who said it.

The message was this:

The person who wrote this? Andrej Karpathy.

For those unfamiliar with the name, Andrej Karpathy is not just some developer having imposter syndrome. He is:

- A founding member of OpenAI (2015-2017), where he worked on the fundamental AI research that led to GPT and other breakthrough models.

- Former Director of AI at Tesla (2017-2022), where he led the computer vision team that built one of the world’s most advanced self-driving AI systems, now deployed in millions of cars.

- PhD from Stanford under legendary AI researcher Fei-Fei Li.

- Designer and primary instructor of Stanford’s CS231n course—the first deep learning class at Stanford, which became one of its most popular courses and trained an entire generation of AI researchers.

- Named to MIT Technology Review’s Innovators Under 35 (2020) and TIME Magazine’s 100 Most Influential People in AI (2024).

- Currently founder of Eureka Labs, an AI+education company.

In other words: Karpathy didn’t just observe the AI revolution; he helped build it. He was there at the beginning of OpenAI. He architected Tesla’s autonomous driving neural networks. He literally taught deep learning to thousands of Stanford students. He popularised the concept of “Software 2.0”, the idea that neural networks are becoming the new way we write code.

And now, in 2026, even he feels behind.

His description of AI as “some powerful alien tool…with no manual” and the transformation as a “magnitude 9 earthquake” captures perfectly the disorientation that everyone—from junior developers to elite AI researchers—is experiencing right now.

What happened in the comments under Karpathy’s post was extraordinary. Thousands of developers, engineers, CTOs, and technical leaders essentially said: “Thank God someone said it. I thought it was just me.”

One comment summarised it perfectly: “Karpathy sounds like a gaslighting victim. First the AI bros tell us we’re close to AGI and the Bots are at PhD level. Then, when the tools randomly break it’s our fault for not instructing them correctly.”

The feeling is universal because the shift is real. This isn’t about individuals falling behind; it’s about the entire profession being fundamentally refactored in real time.

The phase transition: From authorship to orchestration

What Karpathy is describing, and what everyone is feeling, is a profound “phase transition” in technical leverage.

For decades, software engineering was defined by deterministic authorship: you wrote exact instructions, and machines executed them identically every time. If your code compiled and ran, you knew exactly what it would do. Trust was inherent in the system.

Now we’ve entered an era defined by orchestrating probabilistic intelligence. Large language models are stochastic token generators rather than logic-based programs. Their internal reasoning cannot be “single-stepped” or fully inspected. You can ask the same question twice and get different answers. Sometimes the model makes mistakes. Sometimes it misunderstands context. Sometimes it just hallucinates entirely plausible falsehoods.

In this new world, trust is no longer inherent—it must be engineered.

And that’s where the opportunity for software testers becomes extraordinary.

Level 1: Conditioning and steering = Test case design

The AI challenge

Karpathy describes needing to master “agents, subagents, their prompts, contexts, memory, modes, permissions, tools, plugins, skills” as part of this new layer. Breaking this down into practical capabilities:

- Intent specification: Ambiguity causes models to hallucinate. You must develop tight problem contracts with specific definitions and constraints.

- Context engineering: Managing what material enters the context window—the “new IO and databases” of the AI stack.

- Constraint design: Without constraints like schemas, rubrics, and stop conditions, a probabilistic system is merely a “slot machine.”
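To make the constraint idea concrete, here is a minimal Python sketch of a schema check combined with a stop condition. The ticket-triage task, the field names, and the `generate` callable are all hypothetical stand-ins, not any particular vendor's API.

```python
import json

# Hypothetical contract for a ticket-triage task: allowed keys and values.
ALLOWED_SEVERITIES = {"low", "medium", "high"}
MAX_ATTEMPTS = 3  # stop condition: give up rather than loop forever


def validate_triage(raw: str) -> dict:
    """Return the parsed output if it satisfies the contract, else raise ValueError."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    if set(data) != {"severity", "summary"}:
        raise ValueError(f"unexpected keys: {sorted(data)}")
    if not isinstance(data["severity"], str) or data["severity"] not in ALLOWED_SEVERITIES:
        raise ValueError(f"severity out of range: {data['severity']!r}")
    if not isinstance(data["summary"], str) or not 0 < len(data["summary"]) <= 200:
        raise ValueError("summary must be a non-empty string of at most 200 characters")
    return data


def triage_with_constraints(generate, prompt: str) -> dict:
    """Call `generate` until the output passes validation or attempts run out."""
    errors = []
    for _ in range(MAX_ATTEMPTS):
        try:
            return validate_triage(generate(prompt))
        except ValueError as exc:
            errors.append(str(exc))
    raise RuntimeError(f"no valid output after {MAX_ATTEMPTS} attempts: {errors}")


# Stand-in for a model: the first reply is malformed, the second is valid.
_replies = iter(['severity: high', '{"severity": "high", "summary": "Login fails"}'])
result = triage_with_constraints(lambda prompt: next(_replies), "Triage: login fails")
```

The point of the sketch is that the acceptance criteria live in ordinary, deterministic code: the model can be as unpredictable as it likes, but nothing leaves the loop unless it meets the contract.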

This is test case design

Look at what’s actually being described here:

- Intent specification = Writing test requirements with zero ambiguity

- Context engineering = Managing test data, fixtures, and environmental conditions

- Constraint design = Defining acceptance criteria and boundary conditions

Testers have always been the people who look at a vague requirement like “the system should be fast” and say, “Fast how? Under what conditions? What’s acceptable? What’s the failure threshold?”

That skill—eliminating ambiguity—has always been the tester’s superpower. In deterministic code, developers could sometimes get away with fuzzy specifications because the code would at least behave consistently. In probabilistic systems, ambiguity is catastrophic. You’re not just risking bugs—you’re risking complete nonsense.

The foundational skill of AI engineering is actually the foundational skill of software testing: making the implicit explicit.

Level 2: Preserving authority = The testing function itself

The AI challenge

In traditional software, the person who wrote the code naturally held authority over it. You could trace every line, understand every decision. In the age of AI, this “chain of custody” is broken. The system is now required to:

- Verify outputs: Since models produce “plausible falsehoods,” you must design explicit verification mechanisms—some deterministic (like unit tests) and some procedural (like human review).

- Establish provenance: Systems must provide traceability—citations, retrieved documents, audit trails that justify the model’s claims.

- Enforce permissions: Models should never be a security boundary; permissions must be deterministic and based on least privilege.
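The permissions point can be sketched in a few lines of Python. This is an illustrative assumption of how a deny-by-default allowlist might look; the role names, tool names, and `POLICY` table are invented for the example.

```python
from dataclasses import dataclass

# Hypothetical least-privilege policy: each agent role gets an explicit allowlist.
POLICY = {
    "support_agent": {"read_ticket", "draft_reply"},
    "billing_agent": {"read_ticket", "read_invoice"},
}


@dataclass(frozen=True)
class ToolCall:
    role: str
    tool: str


def is_permitted(call: ToolCall) -> bool:
    """Deterministic check: unknown roles and unlisted tools are denied by default."""
    return call.tool in POLICY.get(call.role, set())


def execute(call: ToolCall, tools: dict) -> str:
    """Run a tool only after the policy check; the model's request is never trusted."""
    if not is_permitted(call):
        raise PermissionError(f"{call.role} may not call {call.tool}")
    return tools[call.tool]()


# Stand-in tools; a real system would call actual services here.
tools = {"read_ticket": lambda: "ticket contents", "issue_refund": lambda: "refunded"}
allowed = execute(ToolCall("support_agent", "read_ticket"), tools)
```

Whatever the model asks for, the decision is made by a lookup that behaves identically every time, which is what keeps the model from becoming the security boundary.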

This is literally testing

This level is entirely the testing discipline:

- Verification design – Karpathy explicitly calls out “unit tests” as deterministic verification. This is the core testing function: deciding what to verify, how to verify it, and when verification can be automated versus requiring human judgment.

- Provenance – Test traceability and audit trails have always been quality engineering concerns. Being able to say “this requirement was tested by these test cases, and here’s the evidence” is Testing 101.

- Permission envelopes – This is security testing and authorization validation—ensuring systems respect boundaries.
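As a rough illustration of provenance as a testable property, the sketch below flags any claim whose citation cannot be checked against a retrieved document. The claim structure and document IDs are hypothetical, and verbatim quote matching is a deliberately simple assumption; it shows where the check sits rather than how strong it needs to be.

```python
def unsupported_claims(claims, documents):
    """Return the text of every claim whose citation cannot be checked against a source.

    A claim is supported only if it names a retrieved document and its quote
    appears verbatim in that document.
    """
    flagged = []
    for claim in claims:
        source_text = documents.get(claim.get("source_id"))
        quote = claim.get("quote", "")
        if source_text is None or not quote or quote not in source_text:
            flagged.append(claim["text"])
    return flagged


# Hypothetical retrieved documents and a model answer broken into cited claims.
documents = {"policy-1": "Refunds are issued within 14 days of purchase."}
claims = [
    {"text": "Refunds take up to 14 days.", "source_id": "policy-1",
     "quote": "within 14 days"},
    {"text": "Refunds include shipping costs.", "source_id": "policy-1",
     "quote": "including shipping"},
    {"text": "Gift cards are refundable.", "source_id": None, "quote": ""},
]
flagged = unsupported_claims(claims, documents)
```

Here the second and third claims are flagged: one quotes text that is not in the source, the other cites nothing at all. Both would be routed to human review rather than shipped.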

The phrase “plausible falsehoods” is brilliantly descriptive. It’s the AI equivalent of bugs that pass through traditional testing because they look correct. Testers have always been the discipline that understands “it compiled” doesn’t mean “it works,” and “it works” doesn’t mean “it’s correct.”

That distinction just became existential. Without explicit verification, AI systems are literally unusable.

Level 3: Workflow design = Test architecture

The AI challenge

Karpathy describes needing to master “workflows, IDE integrations” and build systems where “fundamentally stochastic, fallible, unintelligible and changing entities” are “intermingled with what used to be good old-fashioned engineering.”

This breaks down into:

- Decomposition: Breaking tasks into pipeline steps where failures remain local rather than global.

- Failure mode taxonomy: Debugging shifts from tracing code logic to classifying why a model failed (was the task underspecified? was retrieval wrong? did the model lack capability?).

- Observability: Because internal reasoning is opaque, the surrounding system must be made extremely legible through traces of tool calls and intermediate validations.
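These three ideas fit together naturally, and a small Python sketch may help. It assumes a pipeline of named steps, a failure taxonomy taken from the questions above, and a plain list used as a trace; the step functions are hypothetical stand-ins for real retrieval and model calls.

```python
from enum import Enum


class Failure(Enum):
    UNDERSPECIFIED = "task underspecified"
    RETRIEVAL = "retrieval wrong"
    CAPABILITY = "model lacked capability"


class StepError(Exception):
    def __init__(self, kind: Failure, detail: str):
        super().__init__(f"{kind.value}: {detail}")
        self.kind = kind


def run_pipeline(steps, payload, trace):
    """Run named steps in order, recording each outcome so a failure stays local."""
    for name, step in steps:
        try:
            payload = step(payload)
            trace.append({"step": name, "status": "ok", "output": payload})
        except StepError as exc:
            trace.append({"step": name, "status": "failed", "failure": exc.kind.name})
            return None
    return payload


# Hypothetical steps standing in for real retrieval and model calls.
def check_task(task):
    if not task.get("question"):
        raise StepError(Failure.UNDERSPECIFIED, "no question supplied")
    return task


def retrieve(task):
    docs = {"refund policy": "Refunds are issued within 14 days."}
    if task["question"] not in docs:
        raise StepError(Failure.RETRIEVAL, "no matching document")
    return {**task, "context": docs[task["question"]]}


def answer(task):
    return {**task, "answer": task["context"]}


STEPS = [("check_task", check_task), ("retrieve", retrieve), ("answer", answer)]
trace = []
outcome = run_pipeline(STEPS, {"question": "shipping times"}, trace)
```

In this run the pipeline stops at the `retrieve` step, and the trace records both where it stopped and which category of failure it was, without anyone needing to look inside a model.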

This is test architecture

Every piece of this is systems testing and quality engineering at scale:

- Decomposition – Designing systems for testability: breaking complex systems into components with isolated failure modes, clear interfaces, and independent verification points. This is how you make large systems testable.

- Failure mode taxonomy – Root cause analysis and defect classification. Testers have always asked “why did this fail?” and built taxonomies (environmental issue? requirements defect? integration problem? data issue?) to make debugging systematic.

- Observability – Instrumenting systems for testability—adding logging, tracing, and monitoring so that failures can be diagnosed and reproduced. The insight that you can’t inspect internal reasoning, so you must make the surrounding system legible, is classic test architecture thinking.

When Karpathy describes the challenge of debugging systems where you can’t see inside the black box, he’s describing what testers have dealt with forever. When you can’t step through the code (because it’s a model, or because it’s proprietary, or because it’s too complex), you instrument what goes in and what comes out. You build harnesses. You create observability.

Level 4: Compounding leverage = Continuous quality

The AI challenge

Karpathy talks about the challenge of systems that are “constantly changing”—models that update, capabilities that shift, and the need to “not fall behind” in a profession experiencing a “magnitude 9 earthquake.”

This requires:

- Evaluation harnesses: Without “evals”—golden sets of examples or regression tests—changing a prompt or model is like playing “Russian roulette” with the system’s performance.

- Feedback loops: Getting the model to critique and revise its own work before final shipment.

- Drift management: Governing systems in a state of continuous change due to model updates and evolving requirements.
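An evaluation harness does not need to be elaborate to be useful. The Python sketch below assumes a tiny golden set and two stand-in "models" (plain functions) to show the shape of a regression gate; the cases, checks, and function names are illustrative only.

```python
# Hypothetical golden set: inputs paired with a check the output must satisfy.
GOLDEN_SET = [
    {"input": "2 + 2", "expect": lambda out: out.strip() == "4"},
    {"input": "capital of France", "expect": lambda out: "paris" in out.lower()},
    {"input": "reverse 'abc'", "expect": lambda out: out.strip() == "cba"},
]


def run_evals(generate, golden_set=GOLDEN_SET):
    """Return the pass rate and the inputs that failed for one prompt/model variant."""
    failures = [
        case["input"]
        for case in golden_set
        if not case["expect"](generate(case["input"]))
    ]
    pass_rate = 1 - len(failures) / len(golden_set)
    return pass_rate, failures


def safe_to_ship(candidate, baseline, tolerance=0.0):
    """Gate a change: the candidate must not score below the baseline."""
    candidate_rate, _ = run_evals(candidate)
    baseline_rate, _ = run_evals(baseline)
    return candidate_rate >= baseline_rate - tolerance


# Stand-ins for two versions of a prompt or model.
def baseline_model(text):
    return {"2 + 2": "4", "capital of France": "Paris", "reverse 'abc'": "cba"}[text]


def candidate_model(text):
    return {"2 + 2": "4", "capital of France": "Paris, France", "reverse 'abc'": "abc"}[text]
```

With these stand-ins, `safe_to_ship(candidate_model, baseline_model)` returns `False` because the candidate regresses on one golden case. Wiring that gate into a build pipeline is what takes the roulette out of changing a prompt.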

This is modern quality engineering

This is the entire modern quality engineering toolkit:

- Evaluation harnesses – Karpathy literally describes regression test suites, golden master testing, and benchmark sets. His “Russian roulette” metaphor is exactly what happens when you deploy without automated testing.

- Feedback loops – Continuous testing, quality gates in CI/CD, and shift-left testing practices that catch issues before production. Self-reviewing systems are just automated test-and-fix loops.

- Drift management – Production monitoring, synthetic testing, and quality metrics that track system behaviour over time as the world changes. This is the recognition that quality isn’t a one-time achievement—it’s a continuous practice.
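Drift management can start as something as modest as a rolling pass rate. The sketch below is one possible shape, assuming production outputs are already being checked pass or fail; the class name, window size, and margin are hypothetical choices, not recommended values.

```python
from collections import deque


class DriftMonitor:
    """Track a rolling pass rate for production checks and flag drops below a baseline."""

    def __init__(self, baseline: float, window: int = 50, margin: float = 0.05):
        self.baseline = baseline
        self.margin = margin
        self.results = deque(maxlen=window)  # oldest results fall out automatically

    def record(self, passed: bool) -> None:
        self.results.append(passed)

    def pass_rate(self) -> float:
        return sum(self.results) / len(self.results) if self.results else 1.0

    def drifting(self) -> bool:
        """True once the window is full and the pass rate falls below baseline - margin."""
        full = len(self.results) == self.results.maxlen
        return full and self.pass_rate() < self.baseline - self.margin


monitor = DriftMonitor(baseline=0.9, window=10)
for _ in range(10):
    monitor.record(True)
healthy = monitor.drifting()

# Simulate a model update after which some checks start failing.
for _ in range(3):
    monitor.record(False)
degraded = monitor.drifting()
```

After the three failures the window holds seven passes out of ten, which is below the 0.85 threshold, so `degraded` is `True` while `healthy` was `False`. The same synthetic checks that gate a release can feed a monitor like this after release.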

This level acknowledges something testers have always known: shipping the software isn’t the end—it’s the beginning. Systems drift. Requirements change. The environment evolves. Quality is ongoing vigilance.

Three critical insights for testers

1. Everyone needs testing skills now

Karpathy’s post went viral because it articulated what everyone is feeling. But here’s what’s underneath that feeling: everyone now needs to do what testers have always done.

AI systems don’t just benefit from testing; they’re unusable without it. Without verification mechanisms, they are “slot machines”: unpredictable, unreliable, and dangerous.

Quality engineering hasn’t gone from “nice to have” to “important.” It’s gone from “important” to “table stakes for AI working at all.”

2. The build/test divide has collapsed

In the deterministic era, there was a clear division: some people built the system (wrote the code), and other people tested it (verified it worked). This created an organisational structure where testing was downstream from building.

That structure is dying.

When building itself becomes about orchestrating unreliable components, building and testing become the same activity. You’re not writing code that you know will work—you’re composing systems from parts that might work, then verifying they work, then monitoring whether they keep working.

Every engineer now needs testing skills. Every AI system needs quality engineering architecture. The distinction has collapsed.

3. Vendor independence requires verification capability

Karpathy describes needing to build “mental models for strengths and pitfalls” of these systems. Why? Because you can’t trust vendor claims.

When OpenAI says GPT-4 is “82% better” at something, or when vendors claim their model “rarely hallucinates,” what they mean is: under certain conditions, with certain prompts, on certain benchmarks, the performance improved. Will it work for your use case? You have no idea until you test it.

Organisations building AI capability without deep quality engineering expertise are taking vendors at their word. That’s not strategy—that’s faith.

The person who can design evaluation harnesses, build observability into agent workflows, create feedback loops that catch hallucinations before production, and establish provenance and verification mechanisms? That’s not just a tester anymore—that’s the most strategic role in the AI era.

The bottom line: You’re not behind—you’re ahead

Karpathy’s post resonated because everyone feels behind. The profession is being “dramatically refactored.” There’s a “magnitude 9 earthquake.” The tools are “powerful but come with no manual.”

But here’s what testers need to understand: The skills required for this new era are exactly the skills you’ve been building your entire career.

When Karpathy describes orchestrating uncertainty without losing authority, he’s not describing a new discipline. He’s describing software testing.

The phase transition in technical leverage isn’t creating something new. It’s revealing that the discipline of quality engineering—of building trust into unreliable systems—was always the hard part. We just didn’t notice because deterministic code made trust automatic.

Everyone feels behind because everyone needs to learn to think like a tester.

And testers? You’re not behind. You’ve been training for this moment for years. The rest of the industry is just now catching up to what you’ve always known:

- Trust must be verified

- Specifications must be precise

- Systems must be observable

- Quality must be continuous

- Uncertainty must be managed

The future belongs to people who can look at a probabilistic black box and say: “Here’s how we’ll verify it. Here’s how we’ll monitor it. Here’s how we’ll know when it’s drifting. And here’s how we’ll maintain trust at scale.”

That’s always been the tester’s job.

It’s just that now, finally, the entire industry realises that it’s the most important job there is.

Read more insights from our Chief Technology Officer by
